What Is Frictionless Design and Why It Matters More Than Ever in Ethical AI

Frictionless design refers to user experiences that are effortless, intuitive, and often invisible. Examples include one-click ordering and Face ID: interfaces that eliminate interruption, hesitation, and cognitive load.

In AI, frictionless design has evolved from a design principle into a philosophy with sweeping psychological and ethical implications, one that recalibrates core aspects of human experience.

Below is a deeper look at what’s transforming this UX trend into a governance issue…

The Future of Friction: Jung Meets Cognitive Architecture

The smoother the interface, the more likely it is that complex behavioral nudging lies beneath it. Frictionless environments can make users more passive, less aware, and more accepting of predefined choices. That is not neutrality; it is design, and at times it bypasses the user's right to make a choice.

A Jungian lens adds depth to this concept. Carl Jung emphasized individuation, a lifelong process of integrating the unconscious and conscious parts of the self. Moments of insight and self-discovery tend to come after periods of deep questioning and introspection, all of which are friction-filled and not always to our liking. Even plants can become hardier and bloom more when exposed to some stress.

AI systems that remove friction may also remove the inner tensions that spark the growth necessary for psychological integration. An open question remains: when systems become so sleek that they automate away the need for discernment, will they also suppress the conditions necessary for personal development, individuation, and resilience?

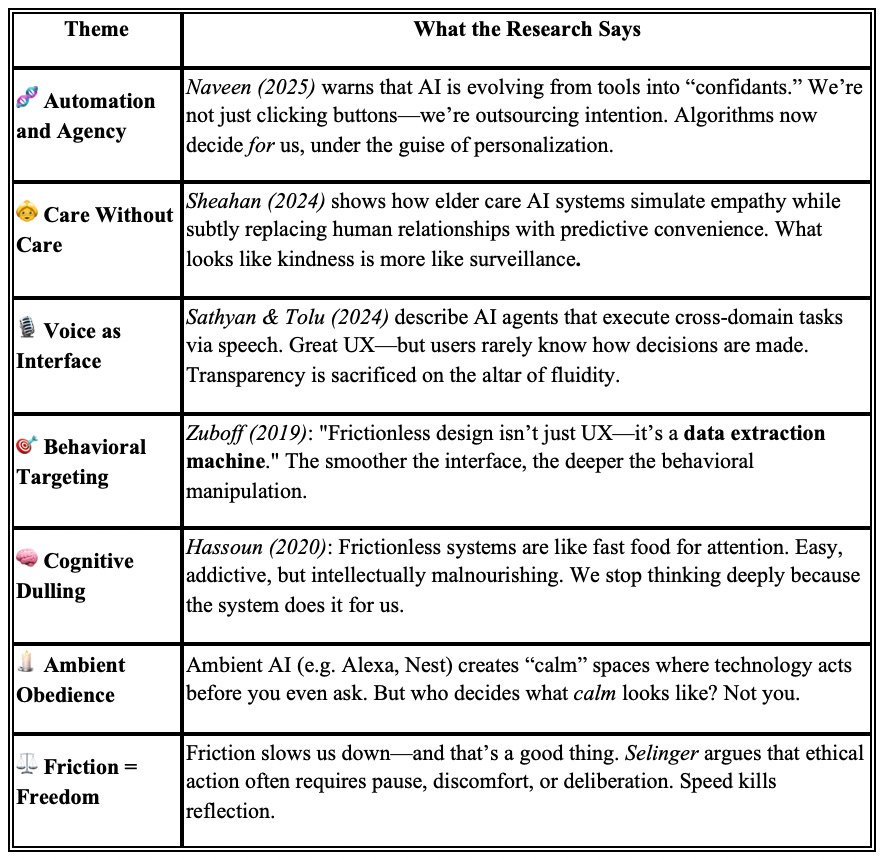

The Latest Research on Frictionless Systems + AI

The following table highlights research on cognitive, relational, and ethical shifts occurring as a result of frictionless design:

Questions for Builders and Ethicists

As we enter an age where design is driven by prediction, ask:

• What kind of cognitive and moral environments are we designing?

• Is friction being removed—or reallocated to the user without their knowledge?

• How might we build AI systems that encourage participation, reflection, and agency—not just passive optimization?

Sources

Dwork, C., Hardt, M., Pitassi, T., Reingold, O., & Zemel, R. (2012). Fairness through awareness. Proceedings of the 3rd Innovations in Theoretical Computer Science Conference, 214–226.

Naveen, P. (2025). The tyranny of algorithmic personification and why we must resist it. AI & Society.

Sheahan, J. (2024). Navigating mediated kinship and care in our aging futures. Anthropology & Aging.

Sathyan, S. T., & Tolu, T. O. (2024). Privacy-Layered Web3 Agents. CryptoCompare.

Zuboff, S. (2019). The Age of Surveillance Capitalism. PublicAffairs.

Hassoun, N. (2020). The Ethics of Attention Manipulation. Ethics and Information Technology, 22(4), 261–273.

Weiser, M., & Brown, J. S. (1997). The Coming Age of Calm Technology. Xerox PARC.

Frischmann, B., & Selinger, E. (2018). Re-Engineering Humanity. Cambridge University Press.